Very long image sequences (such as video frames) can exceed graphics card memory.

In that case, it's more efficient to create a single texture and redefine it on the fly than to use many separate textures.

To replace a texture's image, call glTexImage2D again while that texture is bound.

glTexSubImage2D allows one to load a portion of a texture.

For images whose dimensions are not powers of two (which older OpenGL versions cannot use directly as textures), create a larger power-of-two texture (e.g. 1024 x 512) and sub-load the video data into it.

glTexSubImage2D(GL_TEXTURE_2D, 0, xoffset, yoffset, width, height, format, type, pixels)

Texture coordinates then have to cover a smaller range than 0 to 1.

e.g. for a 640 pixel wide video in a 1024 pixel wide texture, the S coordinate ranges from 0 to 0.625 (640 / 1024).
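The padding arithmetic can be sketched as below. This assumes the frame is sub-loaded at offset (0, 0), so the quad's corners would use texture coordinates (0, 0), (s_max, 0), (s_max, t_max) and (0, t_max); the helper names `next_pow2` and `padded_texture` are hypothetical.

```python
def next_pow2(n):
    """Smallest power of two >= n (assumes n >= 1)."""
    p = 1
    while p < n:
        p *= 2
    return p

def padded_texture(width, height):
    """Return the padded texture size and the (s, t) range the image occupies,
    assuming the image is sub-loaded at xoffset = yoffset = 0."""
    tex_w, tex_h = next_pow2(width), next_pow2(height)
    return (tex_w, tex_h), (width / tex_w, height / tex_h)

size, (s_max, t_max) = padded_texture(640, 480)
print(size, s_max, t_max)  # (1024, 512) 0.625 0.9375
```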

Compressed textures use image data that is compressed, rather than a full width x height array of RGB values.


This reduces the amount of memory needed.

Textures can also download faster.

glCompressedTexImage2DARB(GL_TEXTURE_2D, 0, format, xdim, ydim, 0, size, imageData)
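The `size` argument is the number of bytes of compressed data. As an illustration only, the sketch below assumes the S3TC DXT1 format (GL_COMPRESSED_RGB_S3TC_DXT1_EXT), which the text does not name; DXT1 stores each 4x4 block of texels in 8 bytes. The helper names are hypothetical.

```python
def rgb_bytes(w, h):
    """Uncompressed size: 3 bytes (8-bit R, G, B) per texel."""
    return w * h * 3

def dxt1_bytes(w, h):
    """DXT1 size: 8 bytes per 4x4 block; partial blocks are rounded up."""
    blocks_x = (w + 3) // 4
    blocks_y = (h + 3) // 4
    return blocks_x * blocks_y * 8

print(rgb_bytes(1024, 512), dxt1_bytes(1024, 512))  # 1572864 262144
```

For this format that is a 6:1 saving, and correspondingly less data to send to the card.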


Transformations - translation, rotation, and scaling - can be applied to texture coordinates, similar to how they are applied to geometry. The exact same OpenGL function calls are used. The only difference is that the matrix mode is changed to GL_TEXTURE.

glMatrixMode(GL_TEXTURE)

glTranslatef(0.1, 0.05, 0)

glRotatef(30.0, 0, 0, 1)

glMatrixMode(GL_MODELVIEW)

Setting the matrix mode to GL_TEXTURE means that any subsequent transformation calls will be applied to texture coordinates, rather than vertex coordinates.
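To see what the texture matrix does to a coordinate, here is a small pure-Python model (no OpenGL calls; the helpers are hypothetical). As with the modelview matrix, each call multiplies the current matrix on the right, so glTranslatef followed by glRotatef gives M = T * R, and a texture coordinate is rotated first and then translated.

```python
import math

def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

def translate(x, y, z):
    return [[1, 0, 0, x], [0, 1, 0, y], [0, 0, 1, z], [0, 0, 0, 1]]

def rotate_z(deg):
    """Counter-clockwise rotation about Z, like glRotatef(deg, 0, 0, 1)."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0, 0], [s, c, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]

def apply(m, v):
    return [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]

# Model of: glTranslatef(0.1, 0.05, 0); glRotatef(30.0, 0, 0, 1) on an identity matrix
texture_matrix = mat_mul(translate(0.1, 0.05, 0), rotate_z(30.0))
s, t, r, q = apply(texture_matrix, [1.0, 0.0, 0.0, 1.0])  # (S, T, R, Q) = (1, 0, 0, 1)
print(round(s, 4), round(t, 4))  # 0.966 0.55
```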


One use for a texture transformation is to keep a texture applied at the same scale while an object is scaled.

glMatrixMode(GL_TEXTURE)

glLoadIdentity()

glScalef(size, 1, 1)

glMatrixMode(GL_MODELVIEW)

glScalef(size, 1, 1)
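Why the two matching glScalef calls work can be checked with simple arithmetic. This sketch assumes the object is a unit-wide quad with S running from 0 to 1 and the default GL_REPEAT wrap mode; `texture_repeats_per_unit` is a hypothetical helper.

```python
def texture_repeats_per_unit(size, scale_texture=True):
    """Texture repeats per world unit for a unit quad (s from 0 to 1) that is
    stretched to `size` units by the modelview glScalef(size, 1, 1)."""
    world_width = 1.0 * size                   # modelview scale
    s_range = size if scale_texture else 1.0   # texture-matrix scale, if applied
    return s_range / world_width

for size in (1, 2, 4):
    print(size, texture_repeats_per_unit(size), texture_repeats_per_unit(size, False))
# 1 1.0 1.0
# 2 1.0 0.5
# 4 1.0 0.25
```

With the texture matrix scaled to match, the density stays at one repeat per unit; without it, the texture is stretched as the object grows.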


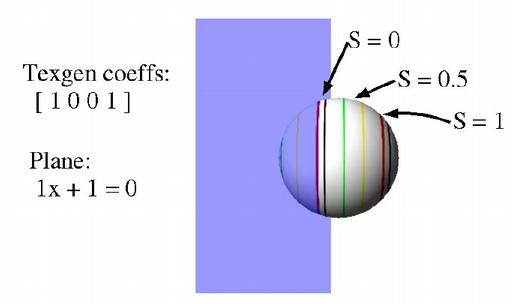

To have OpenGL generate texture coordinates automatically, use the function glTexGen, and enable GL_TEXTURE_GEN_S & GL_TEXTURE_GEN_T with glEnable.

planeCoefficients = [ 1, 0, 0, 0 ]

glTexGeni(GL_S, GL_TEXTURE_GEN_MODE, GL_OBJECT_LINEAR)

glTexGenfv(GL_S, GL_OBJECT_PLANE, planeCoefficients)

glEnable(GL_TEXTURE_GEN_S)

glBegin(GL_QUADS)

glVertex3f(-3.25, -1, 0)

glVertex3f(-1.25, -1, 0)

glVertex3f(-1.25, 1, 0)

glVertex3f(-3.25, 1, 0)

glEnd()
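The S values OpenGL generates for that quad can be reproduced by hand. This is a model of the GL_OBJECT_LINEAR formula (S = A*x + B*y + C*z + D*w, with w = 1 for glVertex3f), not an OpenGL call; `object_linear` is a hypothetical helper.

```python
def object_linear(plane, vertex):
    """S = A*x + B*y + C*z + D*w, with w = 1 for glVertex3f."""
    a, b, c, d = plane
    x, y, z = vertex
    return a * x + b * y + c * z + d * 1.0

plane_coefficients = [1, 0, 0, 0]
quad = [(-3.25, -1, 0), (-1.25, -1, 0), (-1.25, 1, 0), (-3.25, 1, 0)]
print([object_linear(plane_coefficients, v) for v in quad])
# [-3.25, -1.25, -1.25, -3.25]
```

S spans 2 units across the quad, so with GL_REPEAT the texture repeats twice horizontally.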

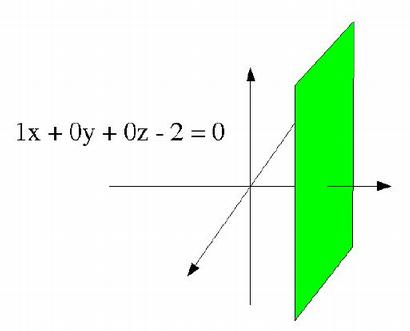

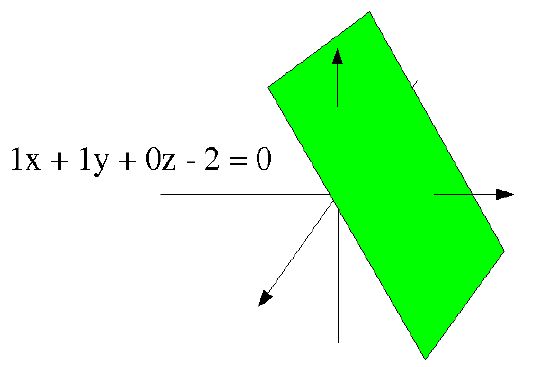

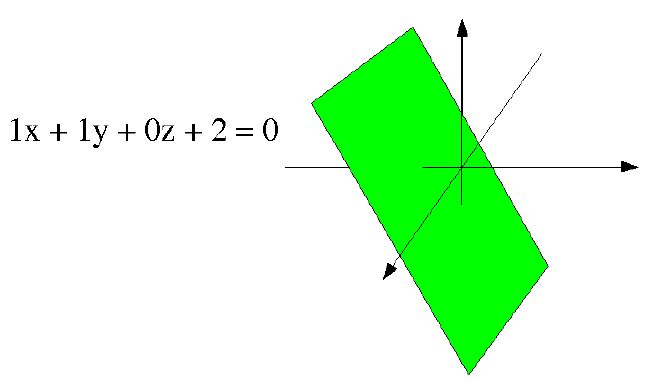

A plane in 3 dimensions can be defined by the equation:

Ax + By + Cz + D = 0

This is an implicit formula: it defines a plane as the set of points that satisfy the equation. In other words, it serves as a test of whether a particular point [x y z] is on the plane.

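Another interpretation is that the value of

Ax + By + Cz + D

is proportional to the signed distance of the point [x y z] from the plane: zero on the plane, positive on the side that [A B C] points toward, and negative on the other side.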

The vector [A B C] is normal (perpendicular) to the plane.

Thus, it defines the plane's orientation.

e.g. A=1, B=0, C=0 defines a plane perpendicular to the X axis, or parallel to the plane of the Y & Z axes.

The plane equation in this case would reduce to x = -D.

D controls the distance of the plane from the origin. If [A B C] is a unit vector, then |D| is equal to the distance from the plane to the origin.

Note that if, for a given [x y z], Ax + By + Cz + D = 0, then 2Ax + 2By + 2Cz + 2D = 0, and in general:

NAx + NBy + NCz + ND = 0

In other words, multiplying the four coefficients by the same constant N will still define the same plane.

However, the value of NAx + NBy + NCz + ND will be N times the value of Ax + By + Cz + D. This is useful in texture coordinate generation.
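A small numeric check of these plane properties, using a hypothetical plane 2x - 4 = 0 (i.e. x = 2): the raw value is the distance times |[A B C]|, dividing by that length gives the true signed distance, and scaling all four coefficients scales the value but not the plane.

```python
import math

def plane_value(plane, p):
    a, b, c, d = plane
    x, y, z = p
    return a * x + b * y + c * z + d

def signed_distance(plane, p):
    a, b, c, _ = plane
    return plane_value(plane, p) / math.sqrt(a * a + b * b + c * c)

plane = (2, 0, 0, -4)                  # 2x - 4 = 0, the plane x = 2
point = (5, 0, 0)
print(plane_value(plane, point))       # 6  (2 * the distance)
print(signed_distance(plane, point))   # 3.0
scaled = tuple(3 * k for k in plane)   # same plane, coefficients times N = 3
print(plane_value(scaled, point))      # 18
```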

The texture generation mode can be one of GL_OBJECT_LINEAR, GL_EYE_LINEAR, or GL_SPHERE_MAP.

When the mode is GL_OBJECT_LINEAR, the texture coordinate is calculated using the plane-equation coefficients that are passed via glTexGenfv(GL_S, GL_OBJECT_PLANE, planeCoefficients).

The coordinate (S in this case) is computed as:

S = Ax + By + Cz + D

using the [x y z] coordinates of the vertex (the values passed to glVertex).

If [A B C] is a unit vector, this means that the texture coordinate S is equal to the distance of the vertex from the texgen plane.

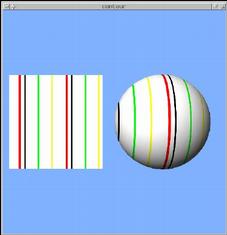

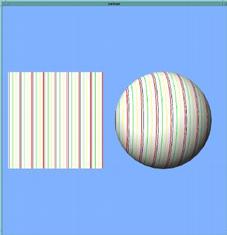

We can change how frequently the texture repeats (i.e. scale the texture) by multiplying the texgen coefficients by different amounts.

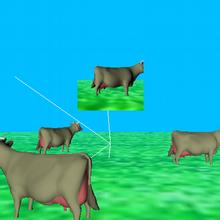

e.g., changing the coefficients in contour.cpp from [1 0 0 0] to [3.5 0 0 0] makes the texture repeat 3.5 times as often along X.
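Applied to the quad from the glTexGen example above (x from -3.25 to -1.25), the effect of scaling the coefficients can be computed directly. `s_range` is a hypothetical helper that only handles planes where B = C = 0.

```python
def s_range(plane, x_min, x_max):
    """S at the two ends of the quad, for a plane with B = C = 0 (S = A*x + D)."""
    a, d = plane[0], plane[3]
    return a * x_min + d, a * x_max + d

for coeffs in ([1, 0, 0, 0], [3.5, 0, 0, 0]):
    lo, hi = s_range(coeffs, -3.25, -1.25)
    print(coeffs, hi - lo)  # texture repeats across the 2-unit-wide quad
# [1, 0, 0, 0] 2.0
# [3.5, 0, 0, 0] 7.0
```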

GL_EYE_LINEAR works similarly, with the coefficients passed via glTexGenfv(GL_S, GL_EYE_PLANE, planeCoefficients).

The difference is that GL_OBJECT_LINEAR operates in "object coordinates", while GL_EYE_LINEAR works in "eye coordinates".

This means that the texture coordinates computed with GL_OBJECT_LINEAR depend solely on the values passed to glVertex, and do not change as the object (or camera) moves via transformations.

The texture coordinates computed with GL_EYE_LINEAR use the positions of vertices after all modeling & viewing transformations, i.e. their positions relative to the camera (before projection).
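The difference can be modeled numerically. OpenGL transforms the eye plane by the inverse of the modelview matrix in effect when glTexGenfv(..., GL_EYE_PLANE, ...) is called. This sketch assumes that matrix was the identity, so the stored plane is unchanged, and models the object's later movement as a pure X translation; the helpers are hypothetical.

```python
def dot4(p, v):
    return sum(a * b for a, b in zip(p, v))

def translate_x(v, dx):
    """Model of glTranslatef(dx, 0, 0) applied to a homogeneous vertex."""
    x, y, z, w = v
    return (x + dx * w, y, z, w)

plane = (1, 0, 0, 0)            # same coefficients for both modes
vertex = (-1.0, 0.0, 0.0, 1.0)  # the value passed to glVertex
for dx in (0.0, 2.0):           # object moved by glTranslatef(dx, 0, 0)
    eye_vertex = translate_x(vertex, dx)
    print(dx, dot4(plane, vertex), dot4(plane, eye_vertex))  # object-linear, eye-linear
# 0.0 -1.0 -1.0
# 2.0 -1.0 1.0
```

The object-linear S stays fixed to the object, while the eye-linear S changes as the object moves, so the texture appears to slide across it.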

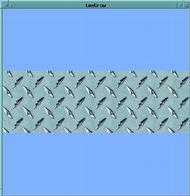

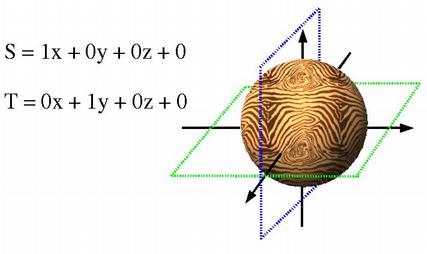

Both S & T coordinates can use texgen, to apply 2D textures with automatic coordinates.

This is done by making similar glTexGen calls for the T coordinate (but using a different plane).

SplaneCoefficients = [ 1, 0, 0, 0 ]

glTexGeni(GL_S, GL_TEXTURE_GEN_MODE, GL_OBJECT_LINEAR)

glTexGenfv(GL_S, GL_EYE_PLANE, SplaneCoefficients)

glTexGenfv(GL_S, GL_OBJECT_PLANE, SplaneCoefficients)

glEnable(GL_TEXTURE_GEN_S)

TplaneCoefficients = [ 0, 1, 0, 0 ]

glTexGeni(GL_T, GL_TEXTURE_GEN_MODE, GL_OBJECT_LINEAR)

glTexGenfv(GL_T, GL_EYE_PLANE, TplaneCoefficients)

glTexGenfv(GL_T, GL_OBJECT_PLANE, TplaneCoefficients)

glEnable(GL_TEXTURE_GEN_T)
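With these two planes, each vertex gets (S, T) = (x, y), a flat projection of the texture along Z. A pure-Python model of the object-linear computation (`texgen_st` is hypothetical):

```python
def texgen_st(s_plane, t_plane, vertex):
    """Object-linear S and T from two planes, with w = 1."""
    x, y, z = vertex
    v = (x, y, z, 1.0)
    s = sum(p * c for p, c in zip(s_plane, v))
    t = sum(p * c for p, c in zip(t_plane, v))
    return s, t

s_plane = [1, 0, 0, 0]
t_plane = [0, 1, 0, 0]
print(texgen_st(s_plane, t_plane, (0.5, 2.0, 7.0)))  # (0.5, 2.0): z is ignored
```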

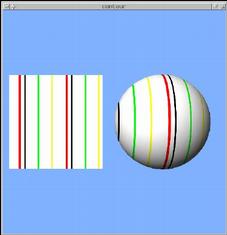

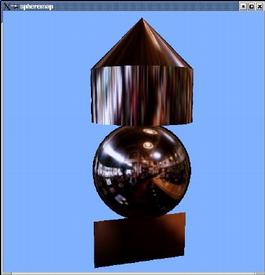

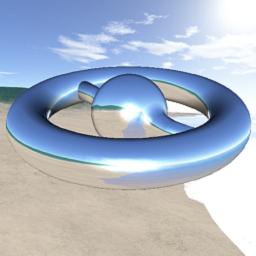

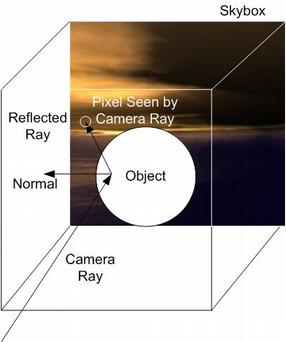

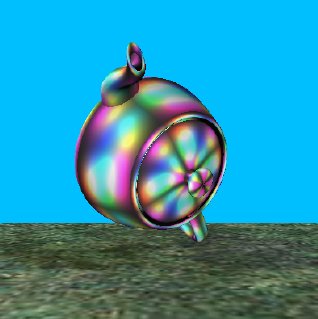

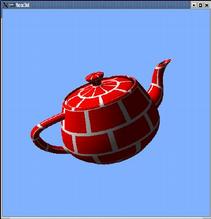

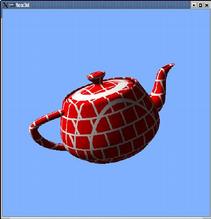

Sphere mapping, a.k.a. reflection mapping or environment mapping, is a texgen mode that simulates a reflective surface.

It is enabled by setting the texgen mode to GL_SPHERE_MAP.

There are no additional parameters.

glTexGeni(GL_S, GL_TEXTURE_GEN_MODE, GL_SPHERE_MAP)

glTexGeni(GL_T, GL_TEXTURE_GEN_MODE, GL_SPHERE_MAP)

glEnable(GL_TEXTURE_GEN_S)

glEnable(GL_TEXTURE_GEN_T)

Texture:

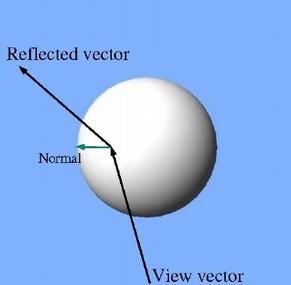

A sphere-mapped texture coordinate is computed by taking the vector from the viewpoint (the eye) to the vertex, and reflecting it about the surface's normal vector. The resulting direction is then used to look up a point in the texture. The texture image is assumed to be warped to provide a 360 degree view of the environment being reflected.
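The lookup can be sketched with the GL_SPHERE_MAP formula from the glTexGen documentation: u is the unit vector from the eye to the vertex in eye coordinates, n is the eye-space normal, r = u - 2n(n . u), m = 2 sqrt(rx^2 + ry^2 + (rz + 1)^2), and (s, t) = (rx/m + 0.5, ry/m + 0.5). This is a pure-Python model; the function names are hypothetical and the normal is assumed to be unit length.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def sphere_map(eye_vertex, eye_normal):
    u = normalize(eye_vertex)  # direction from the eye (origin) to the vertex
    n = eye_normal             # assumed to be unit length
    n_dot_u = sum(a * b for a, b in zip(n, u))
    r = tuple(ui - 2 * ni * n_dot_u for ui, ni in zip(u, n))  # reflected vector
    m = 2 * math.sqrt(r[0] ** 2 + r[1] ** 2 + (r[2] + 1) ** 2)
    return r[0] / m + 0.5, r[1] / m + 0.5

# A surface facing the camera reflects straight back: the centre of the texture
print(sphere_map((0.0, 0.0, -5.0), (0.0, 0.0, 1.0)))  # (0.5, 0.5)
```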

If you don't care about realistically exact reflections, though, any texture can be used.

Texture:

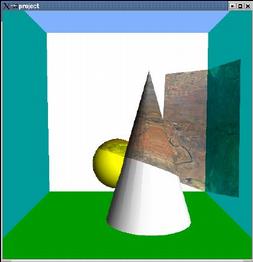

Cube mapping is a newer method of reflection mapping.

The reflection texture is considered to be 6 2D textures forming a cube that surrounds the scene.

This allows for more correct reflection behavior as the camera moves about.
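Choosing which of the 6 images to sample can be sketched as follows, following the face-selection table in the OpenGL specification: the component of the reflection vector with the largest magnitude picks the face, and the other two components, divided by it, give (s, t) on that face. This is a pure-Python model; tie-breaking between equal components is an arbitrary choice here, and `r` must be non-zero.

```python
def cube_map_lookup(r):
    """Return (face, s, t) for a non-zero direction r = (rx, ry, rz)."""
    rx, ry, rz = r
    ax, ay, az = abs(rx), abs(ry), abs(rz)
    if ax >= ay and ax >= az:  # major axis X
        face, sc, tc, ma = ("+X", -rz, -ry, ax) if rx > 0 else ("-X", rz, -ry, ax)
    elif ay >= az:             # major axis Y
        face, sc, tc, ma = ("+Y", rx, rz, ay) if ry > 0 else ("-Y", rx, -rz, ay)
    else:                      # major axis Z
        face, sc, tc, ma = ("+Z", rx, -ry, az) if rz > 0 else ("-Z", -rx, -ry, az)
    return face, (sc / ma + 1) / 2, (tc / ma + 1) / 2

print(cube_map_lookup((1.0, 0.0, 0.0)))   # ('+X', 0.5, 0.5)
print(cube_map_lookup((0.0, 0.0, -1.0)))  # ('-Z', 0.5, 0.5)
```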

Projected textures use 4 texture coordinates: S, T, R, & Q.


Texture projection works similarly to a 3D rendering projection.

A rendering projection takes the (X, Y, Z, W) coordinates of vertices, and converts them into (X, Y) screen positions.

A projection loaded in the GL_TEXTURE matrix can take (S, T, R, Q) texture coordinates and transform them into different (S, T) texture coordinates.
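A sketch of the arithmetic, under stated assumptions: the vertex position (x, y, z, 1) is used as (S, T, R, Q) (for example via object-linear texgen with planes [1 0 0 0], [0 1 0 0], [0 0 1 0], [0 0 0 1]), and the texture matrix holds a hypothetical projector at the origin looking down -Z with focal length 1, followed by a 0.5 scale and bias that maps its [-1, 1] image onto [0, 1]. The final step is the divide by Q.

```python
def mat_vec(m, v):
    return [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]

# Hypothetical projector matrix (scale/bias * simple perspective)
projector = [
    [0.5, 0.0, -0.5, 0.0],
    [0.0, 0.5, -0.5, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, -1.0, 0.0],
]

def project(point):
    s, t, r, q = mat_vec(projector, [*point, 1.0])  # (S, T, R, Q) from the position
    return s / q, t / q                              # perspective divide by Q

print(project((0.0, 0.0, -2.0)))   # (0.5, 0.5): on the projector's axis
print(project((-1.0, 1.0, -4.0)))  # (0.375, 0.625)
```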

Projecting a texture thus involves 2 major steps:

Normally, a texture is defined using data in main memory - an image that was read from a file, or created algorithmically.

It is also possible to take image data from the frame buffer and load it into a texture.

glCopyTexImage2D(GL_TEXTURE_2D, 0, GL_RGB, x, y, width, height, 0)

copies a width x height block of pixels, whose lower-left corner is at window position (x, y), from the frame buffer into the currently bound texture.
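Which pixels get copied can be modeled with a plain 2D list. This sketch assumes the frame buffer is stored as rows with row 0 at the bottom of the window, matching OpenGL window coordinates; `copy_tex_image` is a hypothetical helper, not the OpenGL function.

```python
def copy_tex_image(framebuffer, x, y, width, height):
    """Return the width x height block whose lower-left corner is (x, y).
    framebuffer[row][col], with row 0 at the bottom of the window."""
    return [row[x:x + width] for row in framebuffer[y:y + height]]

fb = [[10 * r + c for c in range(4)] for r in range(3)]  # a 4 x 3 "window"
print(copy_tex_image(fb, 1, 1, 2, 2))  # [[11, 12], [21, 22]]
```

The bottom row of the copied block becomes the T = 0 row of the texture.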

With glCopyTexImage, we can create a "virtual camera".

Frame-buffer textures can also be used to create a warped view.


| 2D Texture | 3D Texture |
|---|---|

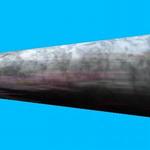

A 2D texture is like a photograph pasted on the surface of an object.

A 3D texture can make an object look as if it were carved out of a solid block of material.

A 3D texture can be thought of as a stack of 2D images, filling a 3D cube.

3D texturing adds an R texture coordinate to the existing S & T coordinates.
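A nearest-neighbour lookup shows how R selects a slice of the stack while S and T select a texel within it. This pure-Python model assumes s, t, r lie in [0, 1] and clamps to the last texel at 1.0; the volume and function are hypothetical.

```python
def sample_3d_nearest(volume, s, t, r):
    """volume[slice][row][col]; s, t, r in [0, 1] select col, row and slice."""
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])

    def texel(coord, size):  # nearest texel index, clamped to the edge
        return min(int(coord * size), size - 1)

    return volume[texel(r, depth)][texel(t, height)][texel(s, width)]

# Hypothetical 2 x 2 x 4 volume: every texel in slice k holds the value k
volume = [[[k, k], [k, k]] for k in range(4)]
print([sample_3d_nearest(volume, 0.5, 0.5, r) for r in (0.0, 0.3, 0.6, 1.0)])
# [0, 1, 2, 3]
```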

3D textures are used in scientific visualization for volume rendering.

A dense stack of quads is rendered, running through the volume.

Alpha transparency is used to see into the volume.
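Seeing into the volume comes from blending the slices together. This single-channel sketch assumes the slices are drawn back to front with glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA), a common choice that the text does not specify.

```python
def composite_back_to_front(slices, background=0.0):
    """slices: (colour, alpha) pairs ordered from farthest to nearest."""
    colour = background
    for c, a in slices:
        # result = src * alpha + dst * (1 - alpha)
        colour = a * c + (1 - a) * colour
    return colour

print(composite_back_to_front([(1.0, 0.5), (1.0, 0.5)]))  # 0.75
```

Each semi-transparent slice lets part of what lies behind it show through, so interior structure contributes to the final pixel.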

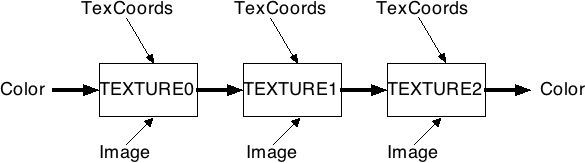

Multitexturing allows the use of multiple textures at one time.

An ordinary texture combines the base color of a polygon with color from the texture image. In multitexturing, this result of the first texturing can be combined with color from another texture.


Multitexturing involves 2 or more "texture units".

Most current hardware has from 2 to 8 texture units.

Each unit has its own texture image and environment.

Each vertex has distinct texture coordinates for each texture unit.
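How the units chain together can be modeled per fragment. This sketch assumes every unit uses the GL_MODULATE texture environment and that each texel has already been fetched with that unit's own coordinates (in OpenGL 1.3, units are selected with glActiveTexture and per-unit coordinates are given with glMultiTexCoord2f). The names are hypothetical.

```python
def modulate(a, b):
    """GL_MODULATE for RGB: component-wise product."""
    return tuple(x * y for x, y in zip(a, b))

def multitexture(base_colour, texels):
    """Each unit modulates the previous unit's result by its own texel colour."""
    colour = base_colour
    for texel in texels:  # unit 0, unit 1, ...
        colour = modulate(colour, texel)
    return colour

# White base colour, a wall texture on unit 0, a darkening light map on unit 1
print(multitexture((1.0, 1.0, 1.0), [(0.8, 0.6, 0.4), (0.5, 0.5, 0.5)]))
# (0.4, 0.3, 0.2)
```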

A technique to produce advanced lighting effects using textures, rather than (or in addition to) ordinary OpenGL lighting.

OpenGL lighting is only calculated at vertices. The results are interpolated across a polygon's face.

For large polygons, this can yield visibly wrong results.
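A worked case of the problem: a hypothetical 20 x 20 floor quad with a point light 1 unit above its centre. Diffuse (Lambert) lighting evaluated only at the corners is dim, and interpolation carries that dim value to the centre, where the true lighting is at full strength.

```python
import math

def diffuse(point, light, normal=(0.0, 1.0, 0.0)):
    """Lambert term max(N . L, 0) for a point on the floor plane y = 0."""
    l = [lc - pc for lc, pc in zip(light, point)]
    length = math.sqrt(sum(c * c for c in l))
    return max(sum(n * c / length for n, c in zip(normal, l)), 0.0)

light = (0.0, 1.0, 0.0)
corners = [(-10.0, 0.0, -10.0), (10.0, 0.0, -10.0), (10.0, 0.0, 10.0), (-10.0, 0.0, 10.0)]

# Per-vertex: all four corners get the same dim value, so interpolation
# gives that value everywhere on the face, including the centre
per_vertex_centre = sum(diffuse(c, light) for c in corners) / 4
# Per-pixel: lighting evaluated at the centre itself
per_pixel_centre = diffuse((0.0, 0.0, 0.0), light)
print(round(per_vertex_centre, 3), per_pixel_centre)  # 0.071 1.0
```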

Also, OpenGL lighting does not produce shadows.

A simple way to do this is to include shadows & lighting in the texture applied to an object.

Some modeling packages can do this automatically (called "baking" the shadows into the textures, in at least one case).
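A minimal sketch of baking, assuming a floor square lit by one point light: lighting is evaluated once per texel of a light map, which can then be applied with GL_MODULATE. `bake_lightmap` is hypothetical, and unlike a real baker it ignores shadows (which would need a visibility test per texel).

```python
import math

def bake_lightmap(size, light, extent=10.0):
    """Evaluate a Lambert term (normal +Y) at each texel centre of a size x size
    light map covering the floor square [-extent, extent]^2 at y = 0."""
    lightmap = []
    for j in range(size):
        row = []
        for i in range(size):
            x = -extent + (i + 0.5) * 2 * extent / size
            z = -extent + (j + 0.5) * 2 * extent / size
            lx, ly, lz = light[0] - x, light[1], light[2] - z
            row.append(ly / math.sqrt(lx * lx + ly * ly + lz * lz))  # N . L
        lightmap.append(row)
    return lightmap

lm = bake_lightmap(8, (0.0, 1.0, 0.0))
print(round(lm[0][0], 3), round(lm[3][3], 3))  # corner texel dark, centre texel bright
```

Because the light map has many texels across the face, it captures the bright spot that per-vertex lighting misses.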

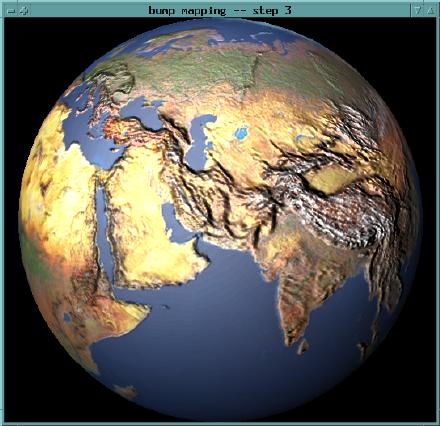

A texture is used to modify surface normals, which affect lighting, on a per-pixel basis.

This yields the appearance of a bumpy surface without large amounts of geometry.
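One common way to derive the perturbed normals is from a height map, sketched below: central differences give the slope at a texel, the normal is tilted against the slope, and the tilted normal changes the lighting. This assumes the unperturbed normal is +Z (tangent space) and is a hypothetical pure-Python model, not a particular OpenGL extension.

```python
import math

def bump_normal(height, i, j, strength=1.0):
    """Perturbed unit normal at interior texel (i, j) of height[j][i]."""
    dhdx = (height[j][i + 1] - height[j][i - 1]) / 2.0
    dhdy = (height[j + 1][i] - height[j - 1][i]) / 2.0
    n = (-strength * dhdx, -strength * dhdy, 1.0)
    length = math.sqrt(sum(c * c for c in n))
    return tuple(c / length for c in n)

def lambert(n, l):
    return max(sum(a * b for a, b in zip(n, l)), 0.0)

flat = [[0.0] * 3 for _ in range(3)]
ramp = [[float(i) for i in range(3)] for _ in range(3)]  # height rises along +x
light = (0.0, 0.0, 1.0)                                  # light straight overhead
print(lambert(bump_normal(flat, 1, 1), light))            # 1.0
print(round(lambert(bump_normal(ramp, 1, 1), light), 3))  # 0.707
```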